Learned Dirty Paper Coding

NYU Wireless P.I.s

Research Overview

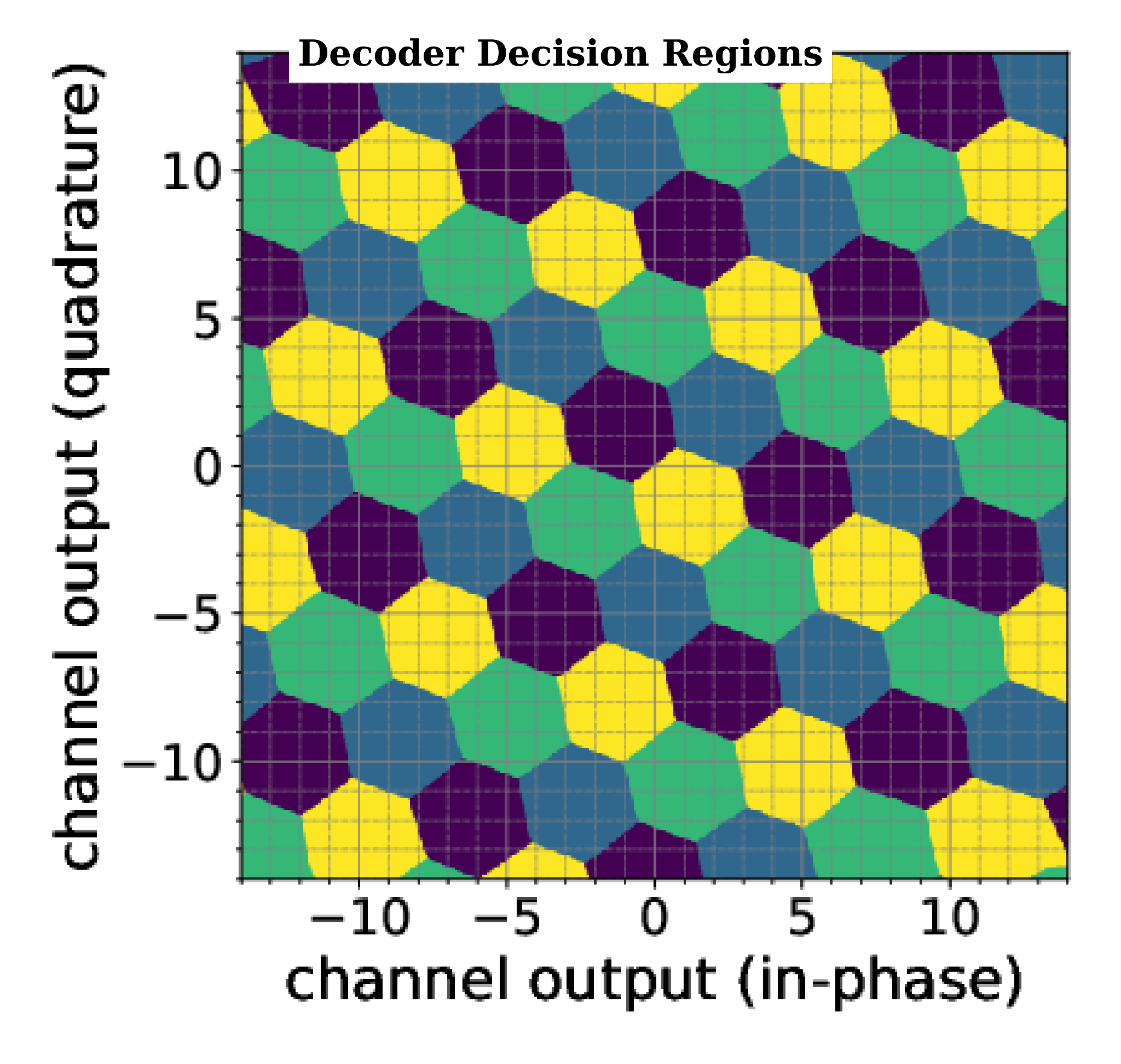

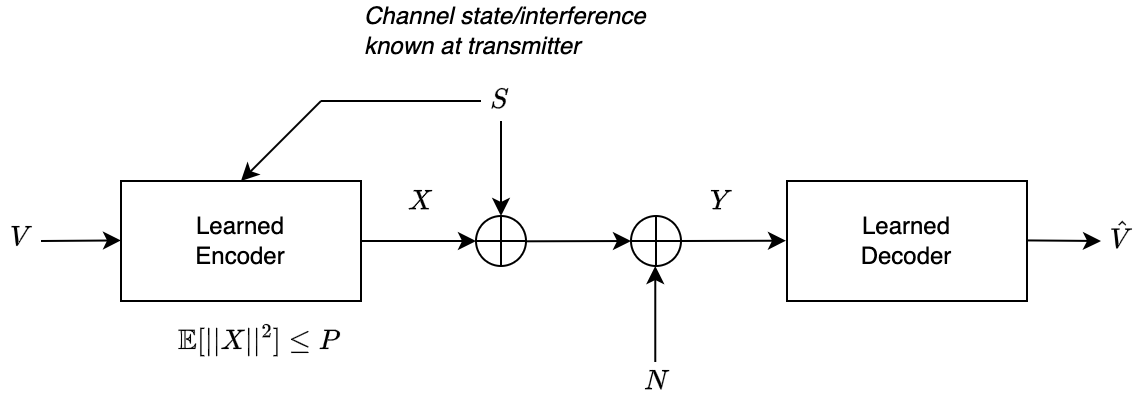

Dirty paper coding (DPC) is a classical problem in information theory concerning communication over a channel corrupted by an interfering state that is known to the transmitter but not to the receiver. While its theoretical significance is well established, practical implementations such as Tomlinson–Harashima precoding and lattice-based precoding rely on specific modeling assumptions about the input, the state, and the channel. This project revisits the DPC problem through the lens of learning-based modeling, asking whether data-driven approaches can complement traditional analytical designs.
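To make the idea of interference pre-cancellation concrete, here is a minimal sketch of scalar Tomlinson–Harashima precoding (THP) in Python/NumPy. It assumes M-PAM data symbols, a Gaussian interference sample s known only at the transmitter, and additive Gaussian noise at the receiver. The function names (`modulo`, `pam_slice`, `thp_simulate`) and all parameter values are illustrative assumptions, not code from this project.

```python
import numpy as np

def modulo(v, M):
    """Fold v into [-M, M) with period 2M (the THP modulo operator)."""
    return v - 2 * M * np.floor((v + M) / (2 * M))

def pam_slice(v, M):
    """Map each value to the nearest M-PAM point in {-(M-1), ..., M-1}."""
    idx = np.clip(np.round((v + M - 1) / 2), 0, M - 1)
    return 2 * idx - (M - 1)

def thp_simulate(n=100_000, M=4, state_std=10.0, noise_std=0.1, seed=0):
    """Scalar THP over y = x + s + z, with s known only at the transmitter."""
    rng = np.random.default_rng(seed)
    a = 2 * rng.integers(0, M, size=n) - (M - 1)  # M-PAM data symbols
    s = state_std * rng.standard_normal(n)        # interfering state ("dirt")
    z = noise_std * rng.standard_normal(n)        # receiver noise
    x = modulo(a - s, M)                # pre-subtract s, fold to bound power
    y = x + s + z                       # channel adds the state and noise
    a_hat = pam_slice(modulo(y, M), M)  # receiver folds, then slices (no s needed)
    return x, a, a_hat

x, a, a_hat = thp_simulate()
print("symbol error rate:", np.mean(a != a_hat))
print("transmit power:", np.mean(x ** 2), "vs. unprecoded 4-PAM:", (4 ** 2 - 1) / 3)
```

Because the state's spread (standard deviation 10) is much larger than the modulo period 2M = 8, x is close to uniform on [-M, M), so its power should sit near M²/3 ≈ 5.33, slightly above the (M² - 1)/3 = 5 of unprecoded 4-PAM; that small gap is the usual THP precoding loss. Simply transmitting a - s without the modulo step would instead require roughly 5 + 100 units of power.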

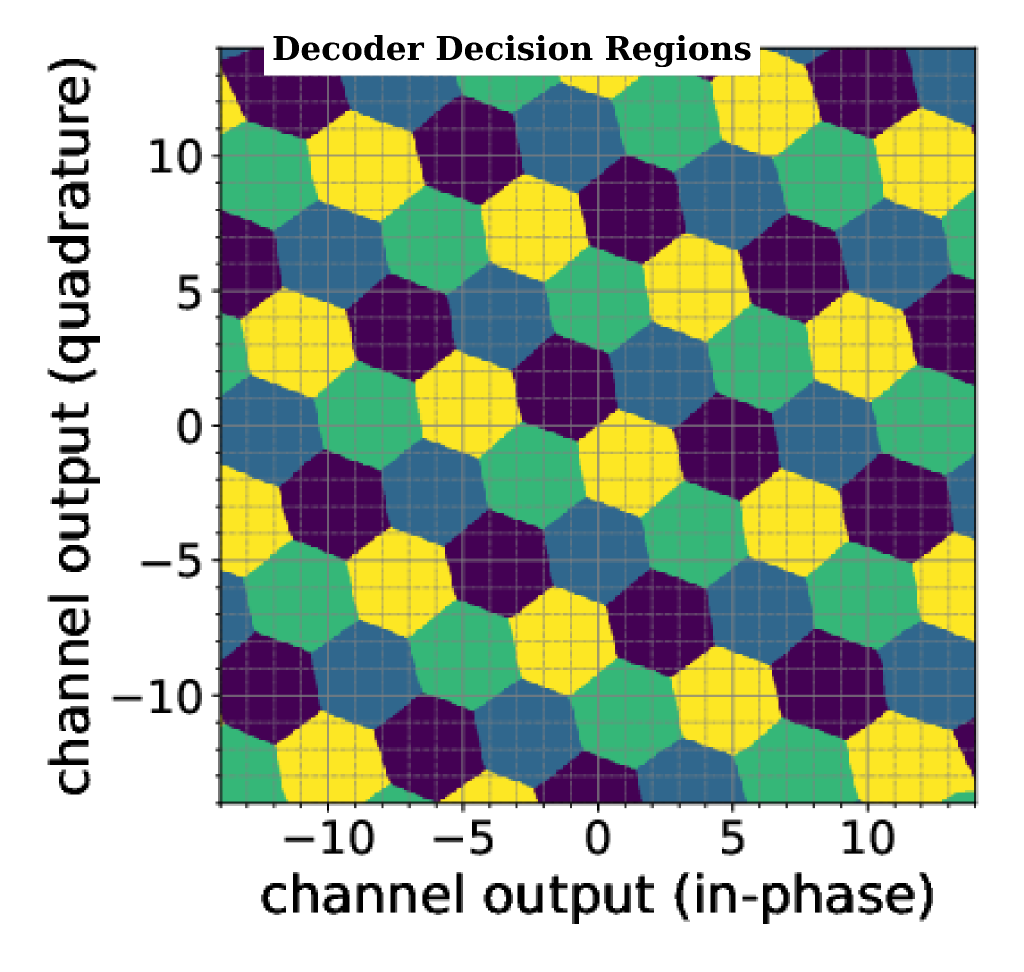

We develop a framework in which both the encoder and the decoder are neural networks that learn interference pre-cancellation directly from data, without requiring prior knowledge of the channel, state, or input statistics. The learned models recover characteristic features of well-known structured schemes while offering greater flexibility and robustness across operating conditions.
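One generic way to state such an end-to-end design problem, offered here as an illustrative assumption rather than as this project's exact formulation, uses Costa's Gaussian dirty-paper channel. A message $m$, drawn uniformly and independently of $S$ and $Z$, is mapped by a hypothetical encoder $f_\phi$ that also observes the state $S$, and a hypothetical decoder $g_\theta$ outputs a probability distribution over messages:

$$
Y = X + S + Z, \qquad X = f_\phi(m, S), \qquad S \sim \mathcal{N}(0, Q), \quad Z \sim \mathcal{N}(0, N),
$$

$$
\min_{\phi,\theta}\; \mathbb{E}\big[-\log g_\theta(m \mid Y)\big] \quad \text{subject to} \quad \mathbb{E}\big[f_\phi(m, S)^2\big] \le P.
$$

Costa showed that the capacity of this channel is $\tfrac{1}{2}\log_2(1 + P/N)$, the same as if $S$ were absent, which gives a natural benchmark for any learned pair $(f_\phi, g_\theta)$. One common choice is to enforce the power constraint by normalizing the encoder output rather than by adding a penalty term.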

This work provides one of the first interpretable demonstrations of learning-based DPC, showing that neural models can reproduce and, in some regimes, surpass the performance of classical precoding. The broader goal is to understand how learning can be systematically integrated with information-theoretic design principles to realize practical and adaptive communication systems.
