Theoretical approaches to compression under modern constraints

NYU Wireless P.I.s

Research Overview

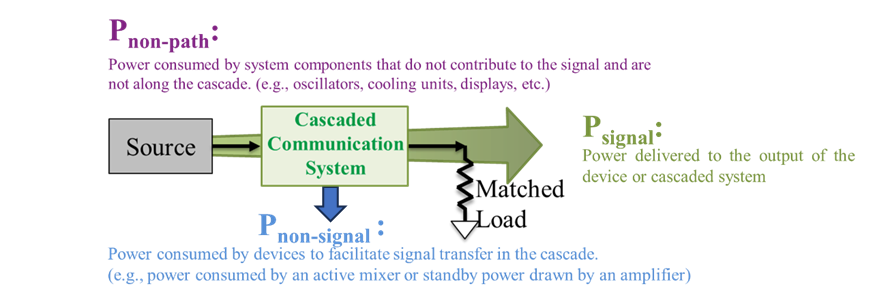

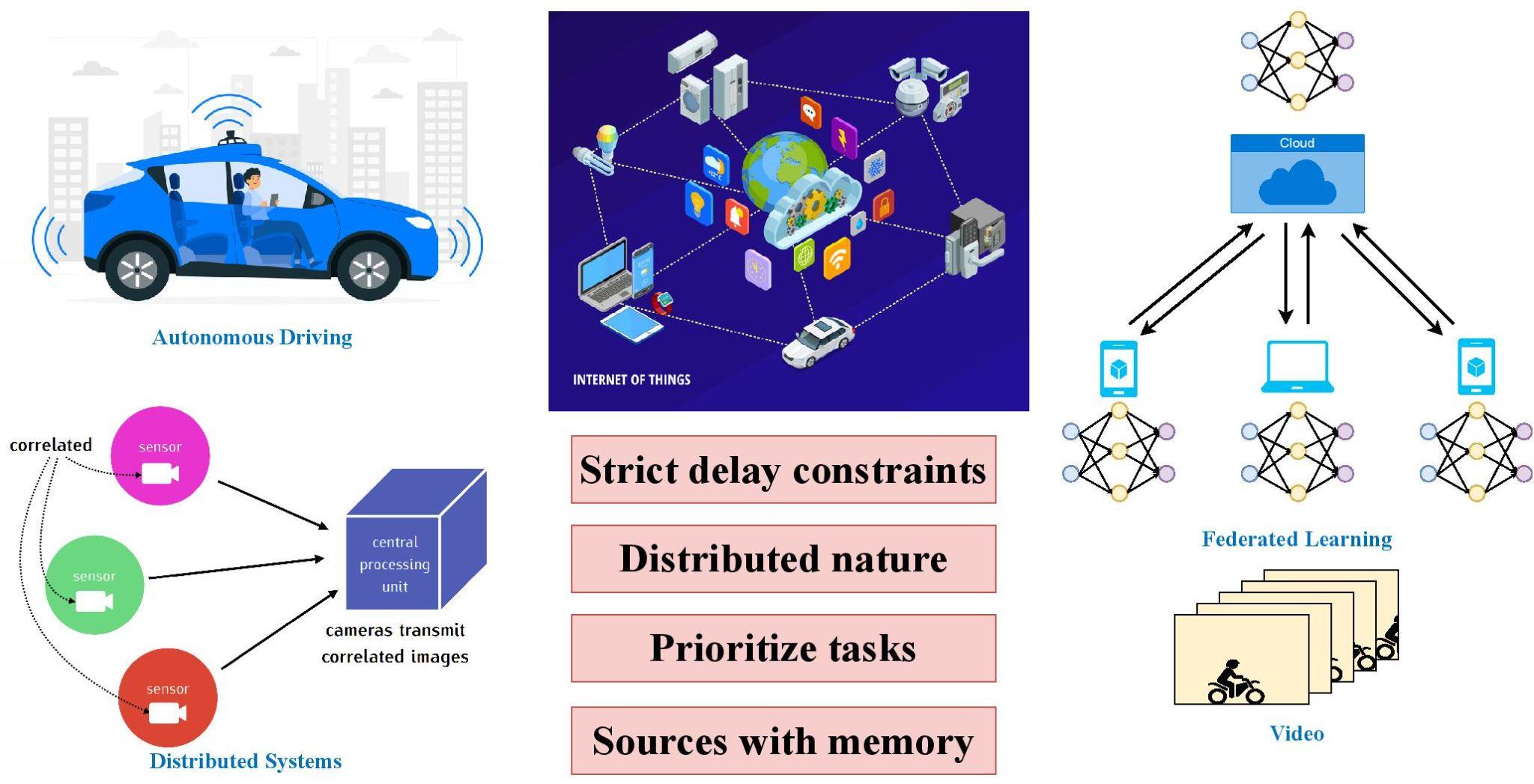

Classical compression, or source coding, focuses on accurately reproducing data from its compressed representation under assumptions of asymptotically large blocklengths, independent and identically distributed (i.i.d.) sources, and distortion measures aligned with reconstruction fidelity. These abstractions have led to elegant theoretical models that were highly successful in traditional communication and compression systems. However, as modern information systems increasingly integrate communication, computation, learning, and control, these classical assumptions become less representative of practical needs. Modern applications such as autonomous driving, the Internet of Things, distributed sensing, and federated learning often operate under strict delay constraints, involve sources with memory, function in distributed settings, and prioritize task performance over exact data reproduction.

Motivated by these emerging challenges, this work focuses on the theoretical foundations of compression under realistic constraints, including single-shot operation, sources with memory, distributed compression, and multi-task settings. We derive new bounds for a range of source coding problems under these conditions, with particular emphasis on the logarithmic loss (log-loss) distortion measure. Log-loss scores a probabilistic prediction q of a symbol x by log(1/q(x)), so it quantifies the quality of soft predictions rather than exact reconstructions. It connects directly to fundamental information-theoretic quantities: for example, the smallest expected log-loss achievable from a description W of X is the conditional entropy H(X|W). It also connects to the information bottleneck framework, offering a unified perspective that bridges compression and learning.
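The link between log-loss and conditional entropy can be checked numerically. The minimal Python sketch below is an illustration only, not code from this work. It assumes a hypothetical toy source: X is uniform on four symbols and the encoder sends the one-bit description W = X // 2. It compares the average log-loss of two decoders. One outputs the posterior P(X | W = w) as its soft reconstruction. The other ignores W and outputs a uniform guess. All function names are hypothetical.

```python
import math

def log_loss(x, q):
    """Log-loss d(x, q) = log2(1 / q[x]) in bits; q is a dict pmf over symbols."""
    p = q.get(x, 0.0)
    return math.inf if p == 0.0 else -math.log2(p)

def expected_log_loss(joint, reconstruct):
    """Average log-loss E[d(X, reconstruct(W))]; joint maps (x, w) -> P(x, w)."""
    return sum(p * log_loss(x, reconstruct(w)) for (x, w), p in joint.items() if p > 0)

def posterior(joint, w):
    """Conditional pmf P(X | W = w), computed from the joint pmf."""
    pw = sum(p for (_, ww), p in joint.items() if ww == w)
    return {x: p / pw for (x, ww), p in joint.items() if ww == w}

def conditional_entropy(joint):
    """H(X | W) in bits."""
    pw = {}
    for (_, w), p in joint.items():
        pw[w] = pw.get(w, 0.0) + p
    return -sum(p * math.log2(p / pw[w]) for (_, w), p in joint.items() if p > 0)

if __name__ == "__main__":
    # Hypothetical toy source: X uniform on {0, 1, 2, 3}, description W = X // 2.
    joint = {(x, x // 2): 0.25 for x in range(4)}
    uniform = {x: 0.25 for x in range(4)}

    # Decoder that outputs the posterior P(X | W = w) as its soft reconstruction.
    print(f"{expected_log_loss(joint, lambda w: posterior(joint, w)):.3f}")  # 1.000
    # H(X | W) for comparison: equal to the posterior decoder's log-loss.
    print(f"{conditional_entropy(joint):.3f}")  # 1.000
    # Decoder that ignores W and guesses uniformly does worse.
    print(f"{expected_log_loss(joint, lambda w: uniform):.3f}")  # 2.000
```

Here the one-bit description lowers the expected log-loss from H(X) = 2 bits to H(X|W) = 1 bit. This is consistent with the known log-loss rate-distortion function of a discrete memoryless source, R(D) = max(H(X) - D, 0).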
