Coding and Streaming of Point Cloud Video

Research Overview

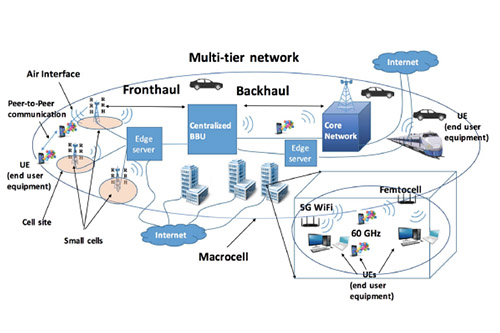

Volumetric video streaming will take telepresence to the next level by delivering full 3D information about the remote scene and enabling six-degree-of-freedom (6DoF) viewpoint selection, creating a truly immersive visual experience. With recent advances in the key enabling technologies, we are now on the verge of completing the puzzle of teleporting holograms of real-world humans, creatures, and objects through the global Internet to realize the full potential of Virtual, Augmented, and Mixed Reality. Streaming volumetric video over the Internet requires significantly higher bandwidth and lower latency than traditional 2D video, and processing volumetric video also imposes heavy computation loads on both the source and receiver sides.

We propose an interdisciplinary research plan that holistically addresses the communication and computation challenges of point cloud video (PCV) by jointly designing coding, streaming, and edge processing strategies. We develop object-centric, view-adaptive, progressive, and edge-aware designs that deliver robust, high-quality viewer Quality of Experience (QoE) in the face of network and viewer dynamics.

This research consists of four thrusts:

- Develop efficient point cloud video coding schemes that facilitate rate adaptation and field-of-view (FoV) adaptation.

- Develop a progressive streaming framework that gradually refines the spatial resolution of each region in the predicted FoV as its playback time approaches.

- Design edge PCV caching algorithms that work seamlessly with edge-based PCV post-processing.

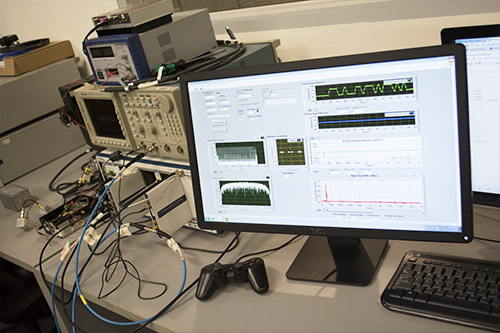

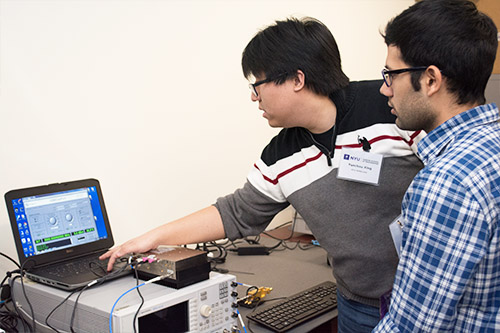

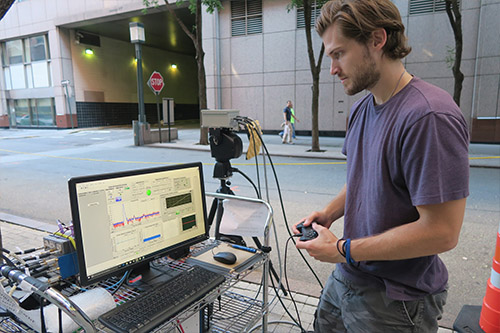

- Develop a fully functional PCV streaming testbed and conduct modern dance education experiments by streaming PCVs of professional dancers to dance students in both on-demand and live settings.
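The progressive streaming thrust can be illustrated with a minimal sketch of a deadline-driven refinement planner. It assumes a layered encoding in which each spatial region is delivered as a base layer plus refinement layers, a FoV predictor that flags each region as inside or outside the predicted FoV, and a simple linear mapping from time-to-playback to a target layer. The names `Region`, `target_level`, `plan_requests`, `horizon`, and `max_level` are hypothetical and illustrative only; this is not the project's actual design.

```python
from dataclasses import dataclass


@dataclass
class Region:
    """Hypothetical spatial region (e.g. a cube of points) of one PCV frame."""
    region_id: int
    in_predicted_fov: bool   # assumed output of a FoV predictor
    delivered_level: int = 0  # resolution layers already downloaded


def target_level(time_to_playback: float, horizon: float, max_level: int) -> int:
    """Map remaining time before playback to a target resolution layer.

    Assumed linear schedule: regions at least `horizon` seconds away get only
    the base layer (1); the target rises to `max_level` at the deadline.
    """
    remaining = max(0.0, min(time_to_playback, horizon))
    fraction = 1.0 - remaining / horizon
    return 1 + round(fraction * (max_level - 1))


def plan_requests(regions, time_to_playback, horizon=3.0, max_level=4):
    """Return (region_id, layer) requests refining predicted-FoV regions."""
    goal = target_level(time_to_playback, horizon, max_level)
    requests = []
    for region in regions:
        if not region.in_predicted_fov:
            continue  # regions outside the predicted FoV are not refined here
        # Request only the layers between what is cached and the current goal.
        for layer in range(region.delivered_level + 1, goal + 1):
            requests.append((region.region_id, layer))
    return requests
```

For example, with regions `Region(0, True)`, `Region(1, False)`, and `Region(2, True, 2)` and one second left before playback, the planner requests layers 1 to 3 for region 0 and only layer 3 for region 2, while region 1 is skipped. Re-running the planner as the deadline approaches is what produces the gradual refinement; a real system would also weigh bandwidth estimates and prediction confidence.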

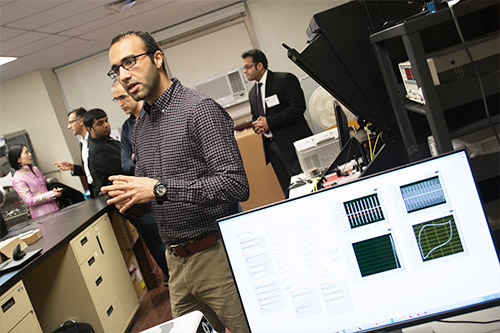
